Big Bird

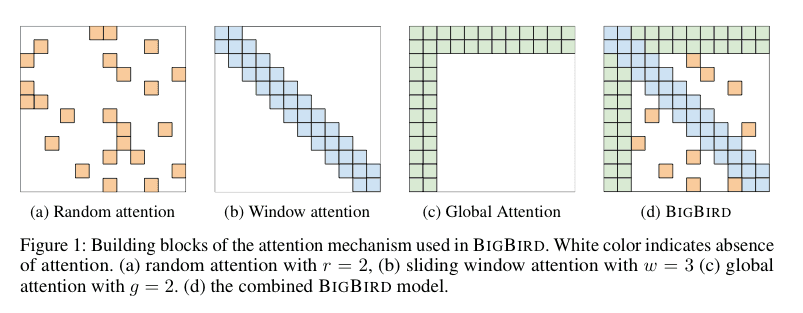

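Big Bird (2020) similarly proposed a combination of global and local attention, but it also had each token attend to a random selection of tokens from elsewhere in the sequence. Each token attends to a constant number of tokens ($O(1)$, set by the window size and the numbers of global and random tokens) instead of all $n$ tokens. The few global tokens still attend to everything, but because their number is fixed, the overall complexity is merely $O(n)$ instead of $O(n^2)$.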
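To make the linear scaling concrete, here is a minimal token-level sketch of the three components (sliding window, global, random) as a set of allowed (query, key) pairs. It is an illustration under stated assumptions, not the paper's implementation. The real model applies this pattern over blocks of tokens. The function name `bigbird_mask`, the choice of the first tokens as the global ones, and the default window, global, and random sizes are all hypothetical.

```python
import random


def bigbird_mask(n, window=3, num_global=2, num_random=3, seed=0):
    """Return the set of (query, key) pairs allowed to attend.

    Hypothetical token-level sketch: the actual Big Bird attention
    works on blocks of tokens, not individual tokens.
    Assumes num_global <= n.
    """
    rng = random.Random(seed)
    allowed = set()
    global_ids = range(num_global)  # assumption: the first tokens are global
    for i in range(n):
        # Local: a sliding window of `window` tokens on each side.
        for j in range(max(0, i - window), min(n, i + window + 1)):
            allowed.add((i, j))
        # Global: every token attends to the global tokens,
        # and the global tokens attend to every token.
        for g in global_ids:
            allowed.add((i, g))
            allowed.add((g, i))
        # Random: a few keys sampled uniformly from the whole sequence.
        for j in rng.sample(range(n), min(num_random, n)):
            allowed.add((i, j))
    return allowed


# Compare the number of attended pairs with full attention (n * n).
for n in (256, 512, 1024, 2048):
    sparse = len(bigbird_mask(n))
    print(f"n={n:5d}  sparse pairs={sparse:7d}  full pairs={n * n:9d}")
```

Each ordinary row contributes at most `2 * window + 1 + num_global + num_random` pairs, and only the fixed number of global rows contribute `n`. Doubling `n` therefore roughly doubles the sparse count, while the full count quadruples.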

Big Bird attention

The paper includes a fairly involved proof that this sparse pattern preserves the theoretical expressiveness of full attention: a transformer using it remains a universal approximator of sequence-to-sequence functions and is Turing complete.

Empirically, this let the model handle sequences up to 8x longer than was previously possible on similar hardware.

Tags: AI